Reading Group Blog -- LSTMs Can Learn Syntax-Sensitive Dependencies Well, But Modeling Structure Makes Them Better (ACL 2018)

Robin Jia 01/25/2019

Welcome to the Stanford NLP Reading Group Blog! Inspired by other groups, notably the UC Irvine NLP Group, we have decided to blog about the papers we read at our reading group.

In this first post, we'll discuss the following paper:

Kuncoro et al. "LSTMs Can Learn Syntax-Sensitive Dependencies Well, But Modeling Structure Makes Them Better." ACL 2018.

This paper builds upon the earlier work of Linzen et al.:

Linzen et al. "Assessing the Ability of LSTMs to Learn Syntax-Sensitive Dependencies." TACL 2016.

Both papers address the question, "Do neural language models actually learn to model syntax?" As we'll see, the answer is yes, even for models like LSTMs that do not explicitly represent syntactic relationships. Moreover, models like RNN Grammars, which build representations based on syntactic structure, fare even better.

Subject-verb number agreement as an evaluation task

First, we must decide how to measure whether a language model has "learned to model syntax." Linzen et al. propose using subject-verb number agreement to quantify this. Consider the following four sentences:

- The key is on the table

- * The key are on the table

- * The keys is on the table

- The keys are on the table

Sentences 2 and 3 are invalid because the subject ("key"/"keys") disagrees with the verb ("are"/"is") in number (singular/plural). Therefore, a good language model should give higher probability to sentences 1 and 4.

For these simple sentences, a simple heuristic can predict whether the singular or plural form of the verb is preferred (e.g., find the closest noun to the left of the verb, and check if it is singular or plural). However, this heuristic fails on more complex sentences. For example, consider:

The keys to the cabinet are on the table.

Here we must use the plural verb, even though the nearest noun ("cabinet") is singular. What matters here is not linear distance in the sentence, but syntactic distance: "are" and "keys" have a direct syntactic relationship (namely an nsubj arc). In general there may be many intervening nouns between the subject and verb ("The keys to the cabinet in the room next to the kitchen..."), making predicting the correct verb form very challenging. This is the key idea of Linzen et al.: we can measure whether a language model has learned about syntax by asking, How well does the language model predict the correct verb form on sentences where linear distance is a bad heuristic?
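To make the failure of the nearest-noun heuristic concrete, here is a minimal sketch. The tiny lexicon `NOUN_NUMBER` and the function name `nearest_noun_number` are made up for illustration; a real system would need a tagger or morphological analyzer instead.

```python
# Toy lexicon mapping nouns to grammatical number (hypothetical, illustration only).
NOUN_NUMBER = {
    "key": "singular", "keys": "plural",
    "cabinet": "singular", "cabinets": "plural",
    "table": "singular", "tables": "plural",
}

def nearest_noun_number(prefix):
    """Return the number of the noun closest to the end of the prefix."""
    for word in reversed(prefix.lower().split()):
        if word in NOUN_NUMBER:
            return NOUN_NUMBER[word]
    return None  # no known noun in the prefix

# The subject "keys" is plural in both prefixes.
simple = nearest_noun_number("The keys")                 # heuristic picks "keys"
hard = nearest_noun_number("The keys to the cabinet")    # heuristic picks "cabinet"
```

On the first prefix the heuristic lands on the subject and gets the right number; on the second it lands on "cabinet" and predicts a singular verb, which is wrong.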

Note how convenient it is that this syntax-sensitive dependency exists in English: it allows us to draw conclusions about syntactic awareness of models that only make word-level predictions. Unfortunately, the downside is that this approach is limited to certain types of syntactic relationships. We might also want to see if language models can correctly predict where a prepositional phrase attaches, for example, but there is no analogue of number agreement involving prepositional phrases, so we cannot develop an analogous test.

LSTM language models learn syntax (but only if they are big enough)

Linzen et al. found that LSTM language models are not very good at predicting the correct verb form in cases where linear distance is unhelpful. On a large test set of sentences from English Wikipedia, they measure how often the language model prefers to generate the verb with the correct form ("are", in the above example) over the verb with the wrong form ("is"). The language model is considered correct if

P("are" | "The keys to the cabinet") > P("is" | "The keys to the cabinet").

This is a natural choice, although another possibility is to let the language model see the entire sentence before predicting. In this regime, the model would be considered correct if

P("The keys to the cabinet are on the table") > P("The keys to the cabinet is on the table").

Here, the model gets to use both the left and right context when deciding the correct verb. This puts it on equal footing with, for example, a syntactic parser, which can look at the entire sentence and generate a full parse tree. On the other hand, you could argue that because an LSTM generates from left to right, whatever is to the right of the verb is irrelevant to whether the model produces the correct verb during generation.
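The two notions of correctness can be sketched against a generic scoring interface. Everything here is an assumption for illustration: `Scorer` stands for any function returning the log-probability of a token sequence, and `toy_score` is a hand-written stand-in, not a trained model. Note that the left-context criterion can be checked with joint scores, since P(verb | prefix) = P(prefix, verb) / P(prefix) and the denominator is the same for both verbs.

```python
from typing import Callable, Sequence

# A scorer maps a token sequence to its log-probability under some language model.
Scorer = Callable[[Sequence[str]], float]

def correct_left_context(score: Scorer, prefix, good_verb, bad_verb) -> bool:
    """Check P(good | prefix) > P(bad | prefix) via joint scores of prefix + verb."""
    return score(list(prefix) + [good_verb]) > score(list(prefix) + [bad_verb])

def correct_full_sentence(score: Scorer, prefix, good_verb, bad_verb, suffix) -> bool:
    """Check P(sentence with good verb) > P(sentence with bad verb)."""
    good = list(prefix) + [good_verb] + list(suffix)
    bad = list(prefix) + [bad_verb] + list(suffix)
    return score(good) > score(bad)

def toy_score(tokens):
    # Made-up scorer for demonstration only: penalize "is" after the plural "keys".
    penalty = 1.0 if ("keys" in tokens and "is" in tokens) else 0.0
    return -float(len(tokens)) - penalty

prefix = "The keys to the cabinet".split()
suffix = "on the table".split()
left_ok = correct_left_context(toy_score, prefix, "are", "is")
full_ok = correct_full_sentence(toy_score, prefix, "are", "is", suffix)
```

With a real language model the two checks can disagree, because the second one also depends on how well each verb form fits the words that follow it.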

Using the "left context only" definition of correctness, Linzen et al. find that the language model does okay on average, but it struggles on sentences in which nouns whose number is opposite to the subject's appear between the subject and verb (such as "cabinet" in the earlier example). The authors refer to these nouns as attractors. The language model does reasonably well (7% error) when there are no attractors, but this jumps to 33% error on sentences with one attractor, and a whopping 70% error (worse than chance!) on very challenging sentences with 4 attractors. In contrast, an LSTM trained specifically to predict whether an upcoming verb is singular or plural is much better, with only 18% error when 4 attractors are present. Linzen et al. conclude that while the LSTM architecture can learn about these long-range syntactic cues, the language modeling objective forces it to spend a lot of model capacity on other things, resulting in much worse error rates on challenging cases.
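The bookkeeping behind "error rate as a function of the number of attractors" is simple enough to sketch. The function names and the input format are my own assumptions, and the toy outcomes below are invented purely to exercise the code; they are not results from either paper.

```python
from collections import defaultdict

def count_attractors(subject_number, intervening_noun_numbers):
    """Count intervening nouns whose number differs from the subject's."""
    return sum(1 for n in intervening_noun_numbers if n != subject_number)

def error_by_attractors(examples):
    """examples: iterable of (subject_number, intervening_noun_numbers, model_correct)."""
    totals, errors = defaultdict(int), defaultdict(int)
    for subject_number, intervening, model_correct in examples:
        k = count_attractors(subject_number, intervening)
        totals[k] += 1
        errors[k] += 0 if model_correct else 1
    # Error rate per attractor count, in increasing order of attractors.
    return {k: errors[k] / totals[k] for k in sorted(totals)}

# Made-up outcomes, only to show the bookkeeping.
toy_examples = [
    ("plural", [], True),
    ("plural", [], True),
    ("plural", ["singular"], True),
    ("plural", ["singular"], False),
    ("singular", ["singular", "plural"], False),
]
rates = error_by_attractors(toy_examples)
```

Grouping this way is what exposes the problem: an average over all sentences is dominated by the easy zero-attractor cases.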

However, Kuncoro et al. re-examine these conclusions, and find that with careful hyperparameter tuning and more parameters, an LSTM language model can actually do a lot better. They use a 350-dimensional hidden state (as opposed to the 50-dimensional one used by Linzen et al.) and are able to get 1.3% error with 0 attractors, 3.0% error with 1 attractor, and 13.8% error with 4 attractors. By scaling up LSTM language models, it seems we can get them to learn qualitatively different things about language! This jibes with the work of Melis et al., who found that careful hyperparameter tuning makes standard LSTM language models outperform many fancier models.

Language model variants

Next, Kuncoro et al. examine variants of the standard LSTM word-level language model. Some of their findings include:

- A language model trained on a different dataset (the One Billion Word Benchmark, which is mostly news instead of Wikipedia) does slightly worse across the board, but still learns some syntax (20% error with 4 attractors).

- A character-level language model does about the same with 0 attractors, but is worse than the word-level model as more attractors are added (6% error as opposed to 3% with 1 attractor; 27.8% error as opposed to 13.8% with 4 attractors). When many attractors are present, the subject is very far away from the verb in terms of number of characters, so the character-level model struggles.

But the most important question Kuncoro et al. ask is whether incorporating syntactic information during training can actually improve language model performance at this subject-verb agreement task. As a control, they first try keeping the neural architecture the same (still an LSTM) but changing the training data so that the model is trying to generate not only words in a sentence but also the corresponding constituency parse tree. They do this by linearizing the parse tree via a depth-first pre-order traversal, so that the tree for a sentence beginning "The keys to..." becomes a sequence of tokens like

["(S", "(NP", "(NP", "The", "keys", ")NP", "(PP", "to", "(NP", ...]

The LSTM is trained just like a language model to predict sequences of tokens like these. At test time, the model gets the whole prefix, consisting of both words and parse tree symbols, and predicts what verb comes next. In other words, it computes

P("are" | "(S (NP (NP The keys )NP (PP to (NP the cabinet )NP )PP )NP (VP").
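The linearization is easy to sketch in a few lines. The nested-tuple tree representation and the bracketing of the running example below are my own assumptions for illustration (the paper's trees come from a parser, not from hand annotation).

```python
def linearize(tree):
    """Depth-first pre-order traversal of a nested (label, child, ...) tuple.

    Leaves are plain strings (words); internal nodes are tuples whose first
    element is the nonterminal label.
    """
    if isinstance(tree, str):
        return [tree]
    label, *children = tree
    tokens = ["(" + label]          # opening symbol comes before the children
    for child in children:
        tokens.extend(linearize(child))
    tokens.append(")" + label)      # closing symbol comes after the children
    return tokens

# Hand-written bracketing of the running example (an assumption, not parser output).
tree = ("S",
        ("NP", ("NP", "The", "keys"), ("PP", "to", ("NP", "the", "cabinet"))),
        ("VP", "are", ("PP", "on", ("NP", "the", "table"))))

tokens = linearize(tree)
# Everything before the verb is the conditioning prefix at test time.
prefix = tokens[:tokens.index("are")]
```

Joining `prefix` with spaces gives a string of words and bracket symbols that ends in the opening "(VP" symbol, which is the kind of context the model conditions on when predicting the verb.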

You may be wondering where the parse tree tokens come from, since the dataset is just a bunch of sentences from Wikipedia with no associated gold-labeled parse trees. The parse trees were generated with an off-the-shelf parser. The parser gets to look at the whole sentence before predicting a parse tree, which technically leaks information about the words to the right of the verb--we'll come back to this concern in a little bit.

Kuncoro et al. find that a plain LSTM trained on sequences of tokens like this does not do any better than the original LSTM language model. Changing the data alone does not seem to force the model to actually get better at modeling these syntax-sensitive dependencies.

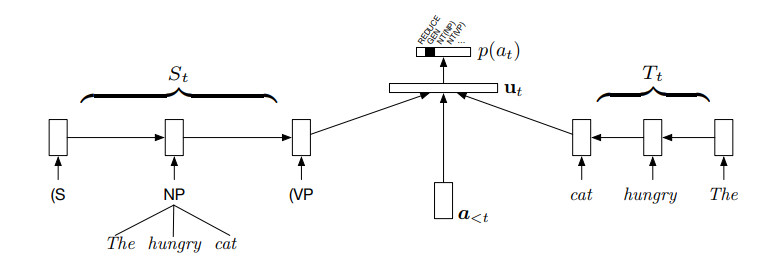

Next, the authors additionally change the model architecture, replacing the LSTM with an RNN Grammar. Like the LSTM that predicts the linearized parse tree tokens, the RNN Grammar also defines a joint probability distribution over sentences and their parse trees. But unlike the LSTM, the RNN Grammar uses the tree structure of words seen so far to build representations of constituents compositionally. The figure below shows the RNN Grammar architecture:

On the left is the stack, consisting of all constituents that have either been opened or fully created. The embedding for a completed constituent ("The hungry cat") is created by composing the embeddings for its children, via a neural network. An RNN then runs over the stack to generate an embedding of the current stack state. This, along with a representation of the history of past parsing actions \(a_{<t}\), is used to predict the next parsing action (i.e., to generate a new constituent, complete an existing one, or generate a new word). The RNN Grammar variant used by Kuncoro et al. ablates the "buffer" \(T_t\) on the right side of the figure.
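The stack mechanics can be simulated without any neural network. In this rough sketch, the action names `NT`, `GEN`, and `REDUCE` follow the usual RNN Grammar convention, while the `Open` marker, `run_actions`, and the symbolic `compose` are hypothetical stand-ins: in the real model, `compose` would be a learned network that turns child embeddings into a single constituent embedding.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Open:
    """Marker for a constituent that has been opened but not yet completed."""
    label: str

def compose(label, children):
    # Placeholder: builds a symbolic tuple where the model would build an embedding.
    return (label, *children)

def run_actions(actions):
    """Replay (kind, argument) parsing actions and return the final stack."""
    stack = []
    for kind, arg in actions:
        if kind == "NT":            # open a new constituent
            stack.append(Open(arg))
        elif kind == "GEN":         # generate a new word
            stack.append(arg)
        elif kind == "REDUCE":      # complete the most recently opened constituent
            children = []
            while not isinstance(stack[-1], Open):
                children.append(stack.pop())
            opened = stack.pop()
            stack.append(compose(opened.label, reversed(children)))
        else:
            raise ValueError(f"unknown action: {kind}")
    return stack

actions = [("NT", "S"), ("NT", "NP"), ("GEN", "The"), ("GEN", "hungry"),
           ("GEN", "cat"), ("REDUCE", None)]
stack = run_actions(actions)
```

After these actions the stack holds the still-open S followed by a single completed NP for "The hungry cat", which is the state described above: one entry per constituent, whether opened or fully created.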

The compositional structure of the RNN Grammar means that it is naturally encouraged to summarize a constituent based on words that are closer to the top-level, rather than words that are nested many levels deep. In our running example, "keys" is closer to the top level of the main NP, whereas "cabinet" is nested within a prepositional phrase, so we expect the RNN Grammar to lean more heavily on "keys" when building a representation of the main NP. This is exactly what we want in order to predict the correct verb form! Empirically, this inductive bias towards using syntactic distance helps with the subject-verb agreement task: the RNN Grammar gets only 9.4% error on sentences with 4 attractors. Using syntactic information at training time does make language models better at predicting syntax-sensitive dependencies, but only if the model architecture makes smart use of the available tree structure.
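One rough way to see the "closer to the top level" claim is to count how many constituents sit between each word and the root of the main NP. The nested-tuple bracketing and the helper `word_depths` are assumptions for illustration only.

```python
def word_depths(tree, depth=0):
    """Map each word to its depth below the root of a nested (label, child, ...) tuple.

    If a word occurs more than once, the last occurrence wins; that is fine
    for this small example.
    """
    if isinstance(tree, str):
        return {tree: depth}
    depths = {}
    for child in tree[1:]:          # tree[0] is the constituent label
        depths.update(word_depths(child, depth + 1))
    return depths

# Hand-written bracketing of the subject NP from the running example.
main_np = ("NP", ("NP", "The", "keys"), ("PP", "to", ("NP", "the", "cabinet")))
depths = word_depths(main_np)
```

Here "keys" ends up one level shallower than "cabinet", even though "cabinet" is the word closest to the verb in linear order.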

As mentioned earlier, one important caveat is that the RNN Grammar gets to use the predicted parse tree from an external parser. What if the predicted parse of the prefix leaks information about the correct verb? Moreover, reliance on an external parser also leaks information from another model, so it is unclear whether the RNN Grammar itself has really "learned" about these syntactic relationships. Kuncoro et al. address these objections by re-running the experiments using a predicted parse of the prefix generated by the RNN Grammar itself. They use a beam search method proposed by Fried et al. to estimate the most likely parse tree structure, according to the RNN Grammar, for the words before the verb. This predicted parse tree fragment is then used by the RNN Grammar to predict what the verb should be, instead of the tree generated by a separate parser. The RNN Grammar still does well in this setting; in fact, it does somewhat better (7.1% error with four attractors present). In short, the RNN Grammar does better than the LSTM baselines at predicting the correct verb, and it does so by first predicting the tree structure of the words before the verb, then using this tree structure to predict the verb itself.

(Note: a previous version of this post incorrectly claimed that the above experiments used a separate incremental parser to parse the prefix.)

Wrap-Up

Neural language models with sufficient capacity can learn to capture long-range syntactic dependencies. This is true even for very generic model architectures like LSTMs, though models that explicitly model syntactic structure to form their internal representations are even better. We were able to quantify this by leveraging a particular type of syntax-sensitive dependency (subject-verb number agreement), and focusing on rare and challenging cases (sentences with one or more attractors), rather than the average case, which can be solved heuristically.

There are many details I've omitted, such as a discussion in Kuncoro et al. of alternative RNN Grammar configurations. Linzen et al. also explore other training objectives besides just language modeling.

If you've gotten this far, you might also enjoy these highly related papers:

- Gulordava et al. "Colorless green recurrent networks dream hierarchically." NAACL 2018. This paper actually came out a bit before Kuncoro et al., and has similar findings regarding LSTM size. But the main point of this paper is to determine whether the LSTM is actually learning syntax, or if it is using collocational/frequency-based information. For example, given "dogs in the neighborhood often bark/barks," knowing that barking is something that dogs can do but neighborhoods can't is sufficient to guess the correct form. To test this, they construct a new test set where content words are replaced with other content words of the same type, resulting in nonce sentences with equivalent syntax. The LSTM language models do somewhat worse with this data but still quite well, again suggesting that they do learn about syntax.

- Yoav Goldberg. "Assessing BERT's Syntactic Abilities." With the recent success of BERT, a natural question is whether BERT learns these same sorts of syntactic relationships. Impressively, it does very well on the verb prediction task, getting 3-4% error rates across the board for 1, 2, 3, or 4 attractors. It's worth noting that these numbers are not directly comparable with the numbers in the rest of the post, both because BERT sees the whole sentence and for data processing reasons.