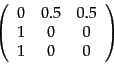

A Markov chain is characterized by an $N \times N$ transition probability matrix $P$ each of whose entries is in the interval $[0,1]$; the entries in each row of $P$ add up to 1. The Markov chain can be in one of the $N$ states at any given time-step; then, the entry $P_{ij}$ tells us the probability that the state at the next time-step is $j$, conditioned on the current state being $i$. Each entry $P_{ij}$ is known as a transition probability and depends only on the current state $i$; this is known as the Markov property. Thus, by the Markov property,

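$$\forall i, j:\quad P_{ij} \in [0, 1] \tag{251}$$

and

$$\forall i:\quad \sum_{j=1}^{N} P_{ij} = 1. \tag{252}$$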
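The two conditions on a transition probability matrix (entries in the interval from 0 to 1, rows summing to 1) can be checked mechanically. The following is a minimal sketch, assuming the matrix is stored as a list of rows; the function name `is_row_stochastic` and the tolerance `tol` are illustrative choices, not part of the text.

```python
import math


def is_row_stochastic(P, tol=1e-9):
    """Return True if P is square, has entries in [0, 1], and rows that sum to 1.

    `tol` is a hypothetical absolute tolerance for floating-point row sums.
    """
    n = len(P)
    for row in P:
        if len(row) != n:
            return False  # not an N x N matrix
        if any(p < 0.0 or p > 1.0 for p in row):
            return False  # an entry is not a probability
        if not math.isclose(sum(row), 1.0, abs_tol=tol):
            return False  # the row does not sum to 1
    return True
```

A matrix that fails either condition cannot serve as the transition probability matrix of a Markov chain.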

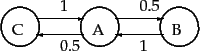

In a Markov chain, the probability distribution of next states depends only on the current state, and not on how the Markov chain arrived at the current state. Figure 21.2 shows a simple Markov chain with three states. From the middle state A, we proceed with (equal) probabilities of 0.5 to either B or C. From either B or C, we proceed with probability 1 to A. The transition probability matrix of this Markov chain is then

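$$P = \begin{pmatrix} 0 & 0.5 & 0.5 \\ 1 & 0 & 0 \\ 1 & 0 & 0 \end{pmatrix} \tag{253}$$

Here the rows and columns are ordered A, B, C.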
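To make the three-state example concrete, the sketch below encodes the chain of Figure 21.2 with states ordered A, B, C and draws a next state from the row of the current state. The names `STATES`, `P` and `next_state` are illustrative assumptions.

```python
import random

# States of the example chain, in the row/column order assumed here.
STATES = ["A", "B", "C"]

# Row i holds the probabilities of moving from state i to each state.
P = [
    [0.0, 0.5, 0.5],  # from A: to B or C with probability 0.5 each
    [1.0, 0.0, 0.0],  # from B: back to A with probability 1
    [1.0, 0.0, 0.0],  # from C: back to A with probability 1
]


def next_state(current, rng=random):
    """Sample the next state given only the current state (Markov property)."""
    i = STATES.index(current)
    return rng.choices(STATES, weights=P[i], k=1)[0]
```

Note that `next_state` receives no history: the row selected by the current state is all the information the chain uses.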

A Markov chain's probability distribution over its states may be viewed as a probability vector: a vector all of whose entries are in the interval $[0,1]$, and the entries add up to 1. An $N$-dimensional probability vector each of whose components corresponds to one of the $N$ states of a Markov chain can be viewed as a probability distribution over its states. For our simple Markov chain of Figure 21.2, the probability vector would have 3 components that sum to 1.

We can view a random surfer on the web graph as a Markov chain, with one state for each web page, and each transition probability representing the probability of moving from one web page to another. The teleport operation contributes to these transition probabilities. The adjacency matrix $A$ of the web graph is defined as follows: if there is a hyperlink from page $i$ to page $j$, then $A_{ij} = 1$, otherwise $A_{ij} = 0$. We can readily derive the transition probability matrix $P$ for our Markov chain from the $N \times N$ matrix $A$.
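As an illustration of how such a derivation can go, here is a minimal sketch of one common construction. It rests on assumptions not stated in this passage: a teleport probability, here given the hypothetical name `alpha`; a surfer on a page with no out-links jumps to a uniformly random page; otherwise the surfer teleports with probability `alpha` and follows a uniformly chosen out-link with probability `1 - alpha`.

```python
def transition_matrix(A, alpha=0.1):
    """Build a row-stochastic matrix P from a 0/1 adjacency matrix A.

    Assumed construction (not taken verbatim from the text):
      - a row of A with no 1s becomes the uniform distribution 1/N;
      - otherwise each 1 is divided by the number of 1s in its row,
        the row is scaled by (1 - alpha), and alpha/N is added to every entry.
    `alpha` is a hypothetical name for the teleport probability.
    """
    n = len(A)
    P = []
    for row in A:
        out_links = sum(row)
        if out_links == 0:
            P.append([1.0 / n] * n)
        else:
            P.append([(1.0 - alpha) * a / out_links + alpha / n for a in row])
    return P
```

Under these assumptions every row of the result sums to 1, since the link-following part contributes `1 - alpha` and the teleport part contributes `alpha`.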

We can depict the probability distribution of the surfer's position at any time by a probability vector $\vec{x}$. At $t = 0$ the surfer may begin at a state whose corresponding entry in $\vec{x}$ is 1 while all others are zero. By definition, the surfer's distribution at $t = 1$ is given by the probability vector $\vec{x}P$; at $t = 2$ by $(\vec{x}P)P = \vec{x}P^2$, and so on. We will detail this process in Section 21.2.2. We can thus compute the surfer's distribution over the states at any time, given only the initial distribution and the transition probability matrix $P$.
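The repeated multiplication of the distribution by the transition probability matrix can be sketched in a few lines. This is a minimal illustration with the distribution treated as a row vector; the function names `step` and `distribution_at` are assumptions made for the example.

```python
def step(x, P):
    """One time-step: multiply the row vector x by the matrix P."""
    n = len(P)
    return [sum(x[i] * P[i][j] for i in range(n)) for j in range(n)]


def distribution_at(x0, P, t):
    """Distribution after t time-steps, starting from the distribution x0."""
    x = list(x0)
    for _ in range(t):
        x = step(x, P)
    return x


# The three-state chain of Figure 21.2, with states ordered A, B, C.
P_example = [
    [0.0, 0.5, 0.5],
    [1.0, 0.0, 0.0],
    [1.0, 0.0, 0.0],
]
x0 = [1.0, 0.0, 0.0]  # the surfer starts in state A with certainty
```

For this chain, `distribution_at(x0, P_example, 1)` puts probability 0.5 on each of B and C, and `distribution_at(x0, P_example, 2)` returns all the probability to A, matching the description of the figure.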

If a Markov chain is allowed to run for many time steps, each state is visited at a (different) frequency that depends on the structure of the Markov chain. In our running analogy, the surfer visits certain web pages (say, popular news home pages) more often than other pages. We now make this intuition precise, establishing conditions under which the visit frequency converges to a fixed, steady-state quantity. Following this, we set the PageRank of each node to this steady-state visit frequency and show how it can be computed.
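The notion of a visit frequency can be illustrated by simulation before it is made precise. The sketch below runs the three-state chain of Figure 21.2 for a fixed number of steps and reports the fraction of steps spent in each state. It is an empirical illustration only, not the computation method developed later; the function name `visit_frequencies` and the `seed` parameter are assumptions.

```python
import random


def visit_frequencies(P, start, steps, seed=0):
    """Simulate the chain and return the fraction of steps spent in each state.

    `seed` is a hypothetical parameter that makes the run repeatable.
    """
    rng = random.Random(seed)
    n = len(P)
    counts = [0] * n
    state = start
    for _ in range(steps):
        # Choose the next state using only the current state's row of P.
        state = rng.choices(range(n), weights=P[state], k=1)[0]
        counts[state] += 1
    return [c / steps for c in counts]


# States ordered A, B, C as in Figure 21.2.
P_example = [
    [0.0, 0.5, 0.5],
    [1.0, 0.0, 0.0],
    [1.0, 0.0, 0.0],
]
freqs = visit_frequencies(P_example, start=0, steps=10_000)
```

Because this chain returns to A on every second step, A accounts for exactly half of the visits over an even number of steps, while B and C each receive roughly a quarter.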